The Surging Power Demand of the Digital Age

Energy efficient data centers optimize power consumption through advanced IT equipment, streamlined cooling systems, and intelligent power distribution, minimizing waste while maintaining high performance.

Key strategies for improving data center efficiency:

- Optimize IT Equipment – Virtualize servers, consolidate workloads, and enable power management features

- Upgrade Cooling Systems – Implement hot/cold aisle containment, free cooling, or liquid cooling for high-density racks

- Streamline Power Infrastructure – Use high-efficiency UPS systems in eco-mode and smart PDUs

- Measure Performance – Track Power Usage Effectiveness (PUE) and target values below 1.5

- Automate Management – Deploy DCIM software for real-time monitoring and optimization

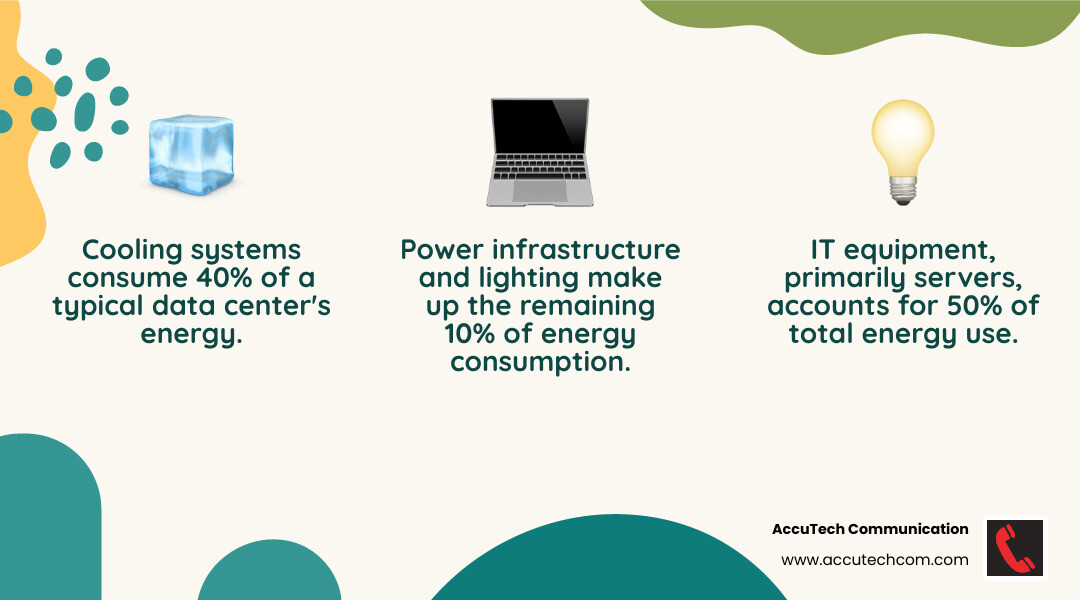

Most people don’t think about the massive amounts of electricity consumed by the digital services they use every day. Yet data centers could account for up to 10% of total global electricity demand growth by 2030, driven largely by AI and cloud computing expansion. With cooling systems alone representing 40% of energy consumption in typical facilities, the urgency to improve efficiency has never been greater.

The good news? Even simple measures can yield significant returns. Inexpensive airflow management improvements can save a large data center $360,000 annually, while containment systems can reduce energy expenses by 5-10%. The challenge is knowing where to start and which strategies will deliver the best results for your specific infrastructure.

I’m Corin Dolan, owner of AccuTech Communications, and I’ve spent over 30 years helping commercial clients across Massachusetts, New Hampshire, and Rhode Island build and maintain robust communication infrastructure, including implementing energy efficient data centers that balance performance with sustainability. Throughout this guide, we’ll explore the proven strategies that can transform your facility from an energy drain into a green giant.

Foundational Strategies for Energy Efficient Data Centers

Achieving energy efficient data centers requires a multi-faceted approach, starting with IT equipment, power infrastructure, and performance metrics. These foundational strategies lay the groundwork for a leaner, greener, and more resilient data center.

Optimizing IT Equipment and Workloads

The IT equipment itself is often the largest consumer of energy in a data center, accounting for roughly 50% of total power. Therefore, optimizing these components and how workloads are managed is paramount for energy efficiency.

- Server Virtualization and Consolidation: A common culprit of energy waste is underused physical servers. Most servers aren’t running anywhere near capacity, yet an idle server can still consume about 60% of its peak power. We can consolidate the workloads of many lightly used physical servers as virtual machines on fewer, more powerful hosts. This reduces the number of physical machines running, saving energy, operating system licenses, and hardware maintenance costs. For example, consolidating 100 physical servers at 15% utilization onto 20 servers at 75% utilization dramatically cuts energy consumption.

- Energy-Efficient Hardware: Older hardware can be a significant drain. A five-year-old server, for instance, can use twice the power of a new one while delivering half the performance. Regularly refreshing hardware with newer, more energy-efficient models that incorporate advancements in chip design and power management is a wise investment. We always recommend evaluating the latest generations of servers, storage, and networking equipment for their performance per watt.

- Power Management Features: Modern servers come with built-in power management features, such as processor power-saving modes, that often need to be activated. These features dynamically adjust power consumption based on workload, scaling down during periods of low utilization. Ensuring these are properly configured can yield substantial savings.

- Workload Scheduling and Software Optimization: Intelligent workload scheduling can move tasks to fewer servers during off-peak hours, allowing other servers to enter low-power states or be shut down. Software optimization, including data deduplication and compression, reduces the amount of data stored and processed, lowering storage and server energy consumption. Deduplication software can reduce stored data by over 95%.

- Decommissioning Ghost Servers: Servers that are powered on but performing no useful work (“ghost servers”) are pure energy waste. Regularly auditing your IT environment to identify and decommission these dormant machines is a simple yet effective step.
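To put rough numbers on the consolidation example above, here is a minimal Python sketch. It assumes a simple linear power model (idle draw of 60% of peak, as cited above, rising to 100% at full load) and a hypothetical 500 W peak per server for both the old and new fleets. Real servers differ, so treat the result as an illustration rather than a forecast.

```python
def server_power_watts(utilization, peak_watts, idle_fraction=0.60):
    """Estimate one server's draw with a linear model between idle and peak."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_watts = idle_fraction * peak_watts
    return idle_watts + (peak_watts - idle_watts) * utilization


def fleet_power_kw(server_count, utilization, peak_watts):
    """Total draw of identical servers running at the same utilization."""
    return server_count * server_power_watts(utilization, peak_watts) / 1000.0


PEAK_WATTS = 500.0  # hypothetical peak draw per server, not a measured value

before_kw = fleet_power_kw(100, 0.15, PEAK_WATTS)  # 100 servers at 15%
after_kw = fleet_power_kw(20, 0.75, PEAK_WATTS)    # 20 servers at 75%
savings = 1 - after_kw / before_kw

print(f"Before: {before_kw:.1f} kW, after: {after_kw:.1f} kW, "
      f"savings: {savings:.1%}")
```

Under these assumptions the same total workload runs on roughly a quarter of the power, because most of the original draw was idle overhead.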

For effective deployment and management of your IT infrastructure, see our complete guide to data center installation.

Streamlining the Power and Electrical Infrastructure

Beyond the IT equipment, the electrical infrastructure supporting the data center plays a crucial role in overall energy efficiency. Power conversion losses, inefficiencies in power delivery, and outdated components can all contribute to significant energy waste.

- High-Efficiency UPS Systems: Uninterruptible Power Supply (UPS) systems are essential for maintaining uptime but can be a source of inefficiency. We look for UPS systems with high efficiency ratings, especially at typical operating loads, and use features like “eco-mode” or “energy-saver mode” when appropriate. Running UPS systems in eco-mode can reduce data center energy costs by as much as 2%. Properly loading UPS systems also improves their efficiency.

- Efficient Power Distribution Units (PDUs): High-efficiency PDUs are 2 to 3 percent more efficient than conventional units. While this might seem small, these incremental gains add up significantly over time in a large facility. Smart PDUs also offer monitoring capabilities that can help identify power usage patterns and areas for optimization.

- Right-Sizing Infrastructure: Over-provisioning power capacity means running equipment that is larger and less efficient than necessary for the current load. We work with clients in Massachusetts, New Hampshire, and Rhode Island to right-size their power infrastructure, ensuring it matches current and projected IT loads without excessive headroom. This avoids unnecessary losses from underused components.

- Direct Current (DC) Power: While still less common, some advanced data centers are exploring DC power distribution directly to IT equipment, which can eliminate several AC-to-DC conversion steps, reducing energy losses.

- Minimizing Conversion Losses: Every time power is converted (e.g., AC to DC, voltage changes), some energy is lost as heat. Optimizing the power chain to reduce the number of conversions and using highly efficient transformers and power supplies can significantly improve overall efficiency.
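Because each conversion stage loses a little energy, the efficiencies of the stages multiply. The Python sketch below shows how small per-stage gains compound across the power chain. The stage names and efficiency values are hypothetical placeholders, not measurements from any particular product.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """Overall efficiency of conversion stages connected in series."""
    for eff in stage_efficiencies:
        if not 0.0 < eff <= 1.0:
            raise ValueError("each efficiency must be in the range (0, 1]")
    return prod(stage_efficiencies)


def conversion_loss_kw(it_load_kw, stage_efficiencies):
    """Power lost as heat upstream of the IT load, in kW."""
    input_kw = it_load_kw / chain_efficiency(stage_efficiencies)
    return input_kw - it_load_kw


# Hypothetical efficiencies for UPS, PDU, and server power supply.
legacy_chain = [0.92, 0.96, 0.88]
improved_chain = [0.96, 0.98, 0.94]

IT_LOAD_KW = 500.0  # hypothetical IT load
for name, chain in (("legacy", legacy_chain), ("improved", improved_chain)):
    print(f"{name}: {chain_efficiency(chain):.1%} efficient, "
          f"{conversion_loss_kw(IT_LOAD_KW, chain):.1f} kW lost")
```

The losses also become heat that the cooling system has to remove, so the real-world benefit of a shorter, more efficient chain is somewhat larger than this calculation alone suggests.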

Measuring Success: Key Performance Indicators for Energy Efficient Data Centers

Key Performance Indicators (KPIs) are vital for understanding your data center’s energy footprint, identifying areas for improvement, and tracking progress toward greater efficiency.

- Power Usage Effectiveness (PUE): PUE is the most widely adopted metric for data center energy efficiency. It is calculated by dividing the total power entering the data center by the power consumed by the IT equipment. A PUE of 1.0 is the theoretical ideal, meaning all power goes directly to IT equipment. The average PUE of data centers in the United States is 1.51. Because lower is better, modern purpose-built facilities should target a PUE of 1.5 or lower, with leading-edge designs achieving 1.3 to 1.4.

- Data Center Infrastructure Efficiency (DCiE): DCiE is simply the inverse of PUE, expressed as a percentage (IT Equipment Power / Total Facility Power x 100%). A higher DCiE indicates better efficiency.

- Carbon Usage Effectiveness (CUE): CUE relates the greenhouse gas emissions associated with the data center’s energy consumption to the energy used by its IT equipment. It provides a more complete picture of environmental impact than PUE alone, especially when considering the energy source (e.g., fossil fuels vs. renewables).

- Water Usage Effectiveness (WUE): WUE quantifies the amount of water used by the data center for cooling and humidification per unit of IT equipment energy. As water scarcity becomes a growing concern, especially in densely populated areas like parts of Massachusetts, managing water consumption is increasingly important.

- PUE Calculation and Benchmarking: Consistently applying PUE calculation methodologies is crucial, as minor changes can affect results. Some organizations now include energy from substations, transformers, and water treatment plants for a more comprehensive view. Benchmarking your PUE against industry averages and best-in-class facilities (with PUEs below 1.2) helps set realistic goals. Continuous monitoring and periodic reviews of these KPIs are essential for sustained energy efficiency.
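The PUE and DCiE definitions above translate directly into a few lines of Python. This is a minimal sketch; the function names and the sample meter readings are our own illustrative assumptions, chosen so the result matches the U.S. average quoted above.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_equipment_kw


def dcie_percent(total_facility_kw, it_equipment_kw):
    """Data Center Infrastructure Efficiency: reciprocal of PUE, in percent."""
    return 100.0 / pue(total_facility_kw, it_equipment_kw)


# Hypothetical readings: 1,510 kW at the utility meter, 1,000 kW at the IT load.
total_kw, it_kw = 1510.0, 1000.0
print(f"PUE {pue(total_kw, it_kw):.2f}, DCiE {dcie_percent(total_kw, it_kw):.1f}%")
# prints: PUE 1.51, DCiE 66.2%
```

Whatever measurement points you choose for the two inputs, use the same ones every time so that readings remain comparable from month to month.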

Mastering Thermal Management: Advanced Cooling Techniques

Cooling systems represent the largest energy consumption outside of IT equipment, often accounting for about 40% of a data center’s total energy use. Effectively managing the heat generated by IT equipment is critical for both energy efficiency and hardware longevity. This is where advanced thermal management techniques come into play.

The Cornerstone of Cooling: Airflow Management Best Practices

Poor airflow management leads to hot spots, requiring over-cooling of the entire data center and wasting significant energy. Simple, inexpensive measures can dramatically improve efficiency.

- Hot Aisle/Cold Aisle Configuration: We arrange server racks in rows so that the fronts face each other (creating cold aisles) and the backs face each other (creating hot aisles). This fundamental layout prevents the mixing of hot exhaust air with cool supply air.

- Aisle Containment Strategies: Taking hot aisle/cold aisle further, containment systems, either hot aisle containment systems (HACS) or cold aisle containment systems (CACS), use physical barriers like curtains or panels to fully separate hot and cold air streams. This significantly reduces cold air bypass and hot air recirculation. Containment in a hot/cold aisle arrangement can reduce energy expenses by 5% to 10%, and the same combination can reduce fan energy use by 20% to 25%.

- Blanking Panels: Unused spaces in server racks allow hot exhaust air to recirculate into the cold aisle. Installing blanking panels in these empty rack units prevents this mixing, ensuring that all cool air is directed through the equipment.

- Sealing Cable Openings: Any gaps in the raised floor or around cable entries can allow conditioned air to escape or unconditioned air to enter, disrupting proper airflow. Sealing these openings ensures that cool air reaches its intended destination.

- Raised Floor Plenums: For data centers utilizing raised floors, maintaining a clean and unobstructed plenum beneath the floor is crucial for efficient air distribution. The height of the raised floor also impacts airflow; deeper plenums (36 inches or more) generally improve distribution.

- Variable Speed Fans: We equip Computer Room Air Conditioners (CRACs) and Computer Room Air Handlers (CRAHs) with variable speed fans. These adjust fan speed based on actual cooling demand, consuming less energy when full capacity isn’t needed.

- Preventing Air Mixing: The overarching goal of all airflow management practices is to prevent the mixing of hot and cold air. This ensures that cooling systems operate efficiently, delivering cool air only where it’s needed and removing hot air effectively. Inexpensive air flow management measures can save a large data center $360,000 annually.
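Variable speed fans pay off because of the fan affinity laws, under which fan power scales approximately with the cube of fan speed. The short Python sketch below applies that idealized relationship. Actual savings depend on the motor, the drive, and the airflow path, so the figure is an upper-bound illustration rather than a guarantee.

```python
def fan_power_fraction(speed_fraction):
    """Ideal fan power relative to full speed (affinity law: power ~ speed^3)."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed fraction must be between 0 and 1")
    return speed_fraction ** 3


speed = 0.80  # fan running at 80% of full speed
power = fan_power_fraction(speed)
print(f"At {speed:.0%} speed the fan draws about {power:.1%} of full power, "
      f"an ideal saving of {1 - power:.1%}")
```

This is why trimming fan speed slightly when full airflow isn’t needed is far more effective than cycling fixed-speed fans on and off.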

Leveraging the Environment: Passive and Free Cooling Strategies

Passive and free cooling strategies harness natural environmental conditions to reduce or eliminate the need for mechanical refrigeration, offering substantial energy savings, especially in climates like Massachusetts, New Hampshire, and Rhode Island, which experience distinct cold seasons.

- Passive Building Design: While often overlooked for data centers, passive design principles can significantly reduce the overall thermal load. This includes strategic site selection to leverage natural shade or prevailing winds, optimizing building shape and orientation, using high-performance insulation for walls and roofs, and incorporating smart glazing to minimize solar heat gain. For instance, the Yahoo “Chicken Coop” data center was inspired by the design of chicken coops, utilizing natural convection for heat discharge and direct air-side economizers for cooling, achieving an impressive PUE of 1.08.

- Air-Side Economizers: These systems use outdoor air to cool the data center when ambient temperatures are low enough. Instead of running energy-intensive chillers, outside air is filtered and brought directly into the data center. NetApp’s data center, for example, reduced cooling system energy usage by nearly 90 percent by using an air-side economizer. However, careful consideration of outdoor air quality and humidity is essential.

- Water-Side Economizers: When outdoor temperatures allow, water-side economizers use a cooling tower to produce chilled water directly, bypassing the mechanical chiller. This can reduce the cost of chilled water by up to 70%. This strategy is particularly effective in regions with consistently cool or cold ambient wet-bulb temperatures.

- Heat Pipes and Thermosiphons: These passive heat transfer devices can move heat away from IT equipment to the cooler exterior environment without mechanical pumps or compressors. They rely on the phase change of a working fluid within a sealed tube. Thermosiphons, for example, can achieve energy savings of 18.7% to 48.6%.

- Benefits and Challenges: Free cooling technologies can achieve an average PUE of 1.5-1.6 and an average Energy Saving Rate (ESR) of 25%-30%. The primary benefit is a drastic reduction in energy consumption for cooling. The main challenge is their dependency on climate. While highly effective in cooler months across New England, mechanical cooling will still be required during warmer periods. Water-side economizers also require careful management of water quality and consumption.
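Since free cooling depends on climate, a useful first check is how many hours per year the outdoor air is cool enough to use. The Python sketch below counts the share of hourly readings at or below a temperature limit. The limit and the sample readings are hypothetical, and the sketch deliberately ignores humidity and air quality, which a real economizer assessment must include.

```python
def economizer_hours_fraction(hourly_temps_c, max_outdoor_c):
    """Share of hours in which outdoor air is at or below the given limit."""
    if not hourly_temps_c:
        raise ValueError("at least one temperature reading is required")
    eligible = sum(1 for temp in hourly_temps_c if temp <= max_outdoor_c)
    return eligible / len(hourly_temps_c)


# Hypothetical hourly dry-bulb readings in Celsius for one spring day.
sample_day_c = [6, 5, 5, 4, 4, 5, 7, 9, 12, 15, 17, 19,
                21, 22, 22, 21, 19, 17, 14, 12, 10, 9, 8, 7]
MAX_OUTDOOR_C = 18.0  # hypothetical limit for usable outside air

share = economizer_hours_fraction(sample_day_c, MAX_OUTDOOR_C)
print(f"Outside air is usable for about {share:.0%} of the sample hours")
```

Running the same calculation over a full year of local weather data gives a first estimate of how often mechanical cooling could be reduced or switched off.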

For a deeper dive into these and other techniques, we recommend exploring “Towards energy-efficient data centers: A comprehensive review of passive and active cooling strategies”.

The Future is Fluid: The Rise of Liquid Cooling

As computing density increases, especially with demanding AI workloads and High-Performance Computing (HPC), traditional air cooling struggles to keep pace. This is where liquid cooling technologies shine, offering superior heat removal capabilities and significantly higher energy efficiency.

| Cooling Method | Average PUE | Average ESR | Ideal Use Case | Pros | Cons |
|---|---|---|---|---|---|
| Air Cooling | 1.4-1.5 | 15-20% | Low-density racks (<5 kW/rack) | Widely adopted, lower upfront cost | Less efficient for high density, higher fan energy |
| Free Cooling | 1.5-1.6 | 25-30% | Regions with cool climates | Low operating cost, environmentally friendly | Climate dependent, potential air quality issues |
| Liquid Cooling | 1.1-1.2 | 45-50% | High-density racks (>20 kW/rack), AI/HPC | High efficiency, supports extreme densities | Higher upfront cost, specialized infrastructure |

Table: Comparison of Air, Free, and Liquid Cooling

- High-Density Racks and AI Workloads: Modern AI deployments can exceed 80 kilowatts per cabinet. Air cooling systems would struggle to dissipate this much heat effectively, leading to hot spots and potential equipment failure. Liquid cooling, with its much higher heat transfer coefficient, is perfectly suited for these extreme densities.

- Direct-to-Chip Cooling: This method involves circulating liquid directly over the hottest components, such as CPUs and GPUs, through cold plates mounted on the chips. This captures heat at its source, preventing it from entering the data center air and reducing the load on traditional air conditioning.

- Single-Phase Immersion Cooling: In this technique, IT equipment (servers, storage, networking gear) is submerged in a non-conductive dielectric fluid. The fluid absorbs heat from the components and is then circulated to a heat exchanger. This eliminates the need for server fans and traditional CRACs, leading to significantly lower energy consumption.

- Two-Phase Immersion Cooling: This is an even more efficient form of immersion cooling where the dielectric fluid boils at a low temperature, directly absorbing heat through latent heat of vaporization. The vapor then rises, condenses on a coil, and drips back down, creating a continuous cooling cycle. Two-phase cooling technologies can achieve an average PUE of 1.5-1.6 and an average ESR of 35%-40%.

- Energy Saving Rate (ESR) and PUE Reduction: Liquid cooling technologies can achieve an average PUE of 1.1-1.2 and an average ESR of 45%-50%. This is a dramatic improvement over air-cooled systems. The Microsoft Project Natick, an experimental underwater data center, achieved a PUE of 1.07 by leveraging the cold seawater for cooling, showcasing the potential of liquid-based solutions.

Liquid cooling is a game-changer for high-power-density environments, offering superior thermal performance and unlocking new levels of energy efficiency. For advanced data center build out and data center migration projects, we evaluate the feasibility of integrating these cutting-edge cooling solutions.

Intelligent Management and Future Innovations

The journey toward truly energy efficient data centers doesn’t end with optimized hardware and cooling systems. It extends into how we manage, monitor, and predict energy consumption, leveraging automation and looking ahead to integrate with broader energy ecosystems.

Automating Efficiency with Data Center Infrastructure Management (DCIM)

Data Center Infrastructure Management (DCIM) software is the brain behind efficient data center operations. It provides a unified platform to monitor and manage all aspects of your physical infrastructure, from power and cooling to IT equipment.

- Real-time Monitoring: DCIM continuously collects data from sensors across your data center – power consumption at the rack, server, and component level; temperature and humidity at various points; airflow rates; and cooling system performance. This real-time visibility is crucial for identifying inefficiencies.

- Predictive Analytics: By analyzing historical and real-time data, DCIM can predict potential issues like hot spots, power capacity limits, or cooling system failures before they occur. It can also forecast future energy demand based on IT workload projections.

- Asset Management: DCIM tracks every asset in the data center, including its location, power consumption, and cooling requirements. This enables efficient capacity planning and ensures that resources are allocated optimally.

- Capacity Planning: With accurate data on power and cooling capacity, DCIM helps prevent over-provisioning (which wastes energy) and under-provisioning (which risks downtime). It ensures that new IT equipment is deployed in locations with adequate power and cooling.

- AI and Machine Learning: Many DCIM solutions now integrate AI and machine learning algorithms. These can analyze vast amounts of sensor data to optimize cooling and power management in real-time. Predictive algorithms anticipate cooling needs before temperatures rise, preventing energy waste from over-cooling. Machine learning can also detect subtle equipment performance changes that indicate developing problems, enabling predictive maintenance that prevents efficiency degradation. DCIM can reduce energy costs by as much as 30%.

- Improving Operational Reliability: By providing insights into infrastructure performance and predicting potential issues, DCIM improves the overall reliability of the data center, reducing the risk of costly downtime.
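To make the monitoring idea concrete, here is a minimal Python sketch of the kind of check a DCIM platform runs continuously: flagging racks whose inlet temperature is too high (a hot spot) or lower than it needs to be (a sign of over-cooling). The `RackReading` type, the rack names, and the threshold values are illustrative assumptions, not the interface of any specific DCIM product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackReading:
    """One sensor snapshot for a rack (hypothetical structure)."""
    rack_id: str
    inlet_temp_c: float
    power_kw: float


def find_hot_spots(readings, max_inlet_c=27.0):
    """Racks whose inlet air is warmer than the allowed maximum."""
    return [r.rack_id for r in readings if r.inlet_temp_c > max_inlet_c]


def find_overcooled(readings, min_inlet_c=18.0):
    """Racks whose inlet air is colder than needed, which wastes energy."""
    return [r.rack_id for r in readings if r.inlet_temp_c < min_inlet_c]


# Hypothetical readings; thresholds above are assumed and should be tuned.
readings = [
    RackReading("A01", 22.5, 4.2),
    RackReading("A02", 29.1, 11.8),
    RackReading("B01", 16.0, 1.3),
]

print("Hot spots:", find_hot_spots(readings))
print("Over-cooled:", find_overcooled(readings))
```

A production system would add trending and forecasting on top of checks like these, but even simple threshold alerts show where airflow or set points need attention.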

For businesses in Massachusetts, New Hampshire, and Rhode Island seeking to implement or upgrade their DCIM capabilities, our expertise in data center infrastructure management can help you harness these powerful tools.

What’s Next for Energy Efficient Data Centers?

The drive for energy efficient data centers is constantly evolving, with several exciting trends and research directions shaping the future.

- Waste Heat Reuse: Data centers generate immense amounts of waste heat. Instead of simply expelling it into the atmosphere, innovative facilities are capturing this heat for beneficial reuse. This could include warming nearby buildings, greenhouses, or even feeding into district heating systems. This concept of “sector coupling” transforms a waste product into a valuable resource, moving towards a circular economy model.

- Renewable Energy Integration: Powering data centers with 100% renewable energy is a growing goal. This involves direct integration with on-site solar or wind farms, or through power purchase agreements (PPAs) that ensure renewable energy sources supply the data center’s electricity. For areas like New England, with growing renewable energy infrastructure, this offers a clear path to reducing carbon footprint.

- Edge Computing: As data processing moves closer to the source of data generation (edge computing), smaller, distributed data centers will become more prevalent. Optimizing energy efficiency for these smaller, often remote, facilities presents unique challenges and opportunities.

- Government Initiatives: Programs like the Federal Energy Management Program (FEMP) encourage agencies and organizations to improve data center energy efficiency, providing resources through its Center of Expertise for Energy Efficiency in Data Centers and encouraging participation in initiatives like the Better Buildings Challenge. These programs drive innovation and adoption of best practices.

- Smart Grid Interaction: Future data centers will increasingly interact with the smart grid, adjusting their energy consumption based on grid conditions, renewable energy availability, and electricity prices. This demand response capability can help stabilize the grid and maximize the use of clean energy.

- Advanced Materials: Research into new materials for cooling, such as advanced phase change materials (PCMs) and nanofluids, continues to push the boundaries of heat transfer efficiency.

These future trends highlight a holistic approach where data centers are not just consumers of energy but active participants in a sustainable energy ecosystem.

Frequently Asked Questions about Data Center Energy Efficiency

What are the primary drivers of increasing energy consumption in data centers?

The primary drivers are the exponential growth of data, the increasing adoption of cloud computing, and the surging demand for AI and machine learning workloads. AI, in particular, is expected to drive a 165% increase in data center power demand by 2030, as high-density computing becomes standard. This leads to more IT equipment, higher power densities per rack, and consequently, greater cooling requirements.

What are the main categories of energy efficiency measures for data centers?

Energy efficiency measures typically fall into five main categories:

- IT Equipment Optimization: Server virtualization, hardware refresh, power management features, workload scheduling.

- Power Infrastructure Efficiency: High-efficiency UPS and PDUs, right-sizing, minimizing conversion losses.

- Airflow Management: Hot/cold aisle containment, blanking panels, sealing gaps.

- HVAC Optimization: Free cooling (air-side, water-side economizers), variable speed fans, higher temperature set points.

- Intelligent Management & Automation: DCIM, AI/ML for real-time optimization, waste heat recovery, renewable energy integration.

What is a good PUE for a data center?

A PUE of 1.0 is the theoretical ideal. Hyperscale data centers can achieve PUEs below 1.2, while the average for U.S. data centers is around 1.51. Any reduction towards 1.0 indicates significant efficiency gains. Our goal for clients across Massachusetts, New Hampshire, and Rhode Island is always to optimize their PUE as much as possible, balancing efficiency with reliability.

Is liquid cooling always better than air cooling?

Not always. Liquid cooling is superior for high-density racks (over 20kW) and AI-driven hardware that generate immense heat, where it offers significantly lower PUEs (around 1.1-1.2) and higher Energy Saving Rates (45-50%). For lower-density environments, optimized air cooling with effective airflow management can still be a cost-effective and efficient solution. The choice depends on the specific heat load, rack density, and budget.

How much can be saved with airflow management?

Simple, inexpensive measures like installing blanking panels and implementing aisle containment can reduce energy expenses by 5-10% or more. For a large data center, this can translate to hundreds of thousands of dollars in annual savings. One large data center, for example, saved $360,000 annually through inexpensive airflow management measures.

Conclusion: Building a Sustainable Digital Future in New England

The digital age demands more from our data centers than ever before. With the explosive growth of data and the insatiable appetite of AI, the energy consumption of these critical facilities is soaring. This presents both a challenge and a tremendous opportunity to innovate and build more sustainable infrastructure.

Achieving energy efficient data centers requires a holistic strategy that encompasses every layer of your operation. From optimizing individual IT components and streamlining the power infrastructure to mastering advanced cooling techniques and leveraging intelligent management systems, every decision contributes to the overall efficiency. We’ve seen how even seemingly small changes, like implementing blanking panels or activating server power management features, can lead to significant energy and cost savings.

For businesses in Massachusetts, New Hampshire, and Rhode Island, partnering with an experienced provider like AccuTech Communications is key to navigating this complex landscape. Since 1993, we’ve been helping commercial clients design, build, and optimize their data center infrastructure, ensuring it meets their performance needs while embracing the principles of energy efficiency. We understand the unique climate considerations of our region and can tailor solutions, from advanced airflow management to free cooling strategies, that deliver tangible benefits.

Let’s work together to transform your data center into a green giant, securing your digital future while reducing your environmental footprint and operational costs.

For more information about our data center services and how we can help you build and operate truly energy efficient data centers, please visit our website.